Content

- 1 On the importance of running tests

- 2 How to use the ready-made split-testing solution by Facebook

- 3 How does automatic split testing function?

- 4 How to launch a split test

- 5 What variables can be tested?

- 6 Can I edit the ads launched in the split-testing mode?

- 7 How to evaluate the results of a split test?

- 8 What the Facebook split testing has that the manual testing doesn’t

- 9 What do advertisers who actively use split testing say about it?

Running tests is a must if you want high-performance results. Tests let you move forward on actual data from your customers rather than on the opinions of specialists or business owners.

A number of services, such as AdEspresso, can automate your advertising tests. You also always have the option of running tests manually and keeping the records in a spreadsheet.

Tests differ in the number of variables. You can run either a test with several parameters varied at once or an A/B test, which compares variants that differ in a single parameter.

Facebook has recently invited everyone to use its updated solution for running A/B tests, called “split testing.” The tool still raises many doubts among advertisers, though. While veterans of ad campaigns remain true to their own testing strategies, Facebook keeps tweaking its solution to attract more advertisers.

Manual or automatic tests? We want you to draw your own conclusions. So here is our description of how the Facebook split-testing tool works, along with our impressions and comments from fellow advertisers.

On the importance of running tests

Advertising means working with real people, and people keep changing. Today you might think you have found the right path to people’s hearts, and tomorrow everything turns upside down.

Before pouring lots of money into large-scale campaigns, any professional drafts hypotheses to test. The first numbers you receive are where you start to feel the ground under your feet.

Take a basic example, such as selling dishes. Your potential customers can range from catering services to private cafes, food courts in supermarkets, chefs and housewives. Their needs can range from the practical usefulness of the product to its visual appeal.

Initially, all your assumptions are based on data from the past: the results of research and surveys and the figures from older campaigns. After testing, you can be more confident in the conversions you expect from any given audience.

Audiences and offers are just the beginning of what you can test. Testing helps you find unexpected insights.

Don’t forget that your first-priority task in advertising is to grab attention with your message. Ad blindness and irritation are both your enemies.

Median ads COO Victor Filonenko shares a story from his experience:

“Quite often, the results I get differ from my expectations at the start. I remember a project where we targeted young people with an interest in video games and got the highest performance from creatives with errors in the text.”

How can you win over someone’s attention and not overstep any lines? Run the tests.

How to use the ready-made split-testing solution by Facebook

If you run ads on Facebook, you’re invited to use its automatic split testing. It’s simple and it’s done for you. Sounds great, right? Well, if you’re used to running tests on your own, you may miss the ability to make your testing more tactical.

Facebook split testing is a tool for testing variants that differ in one variable, on autopilot. It’s designed to make your experience with Facebook ads as simple as possible: the simpler the job, the more users can do it. Plus, all the advertising activity stays within the platform.

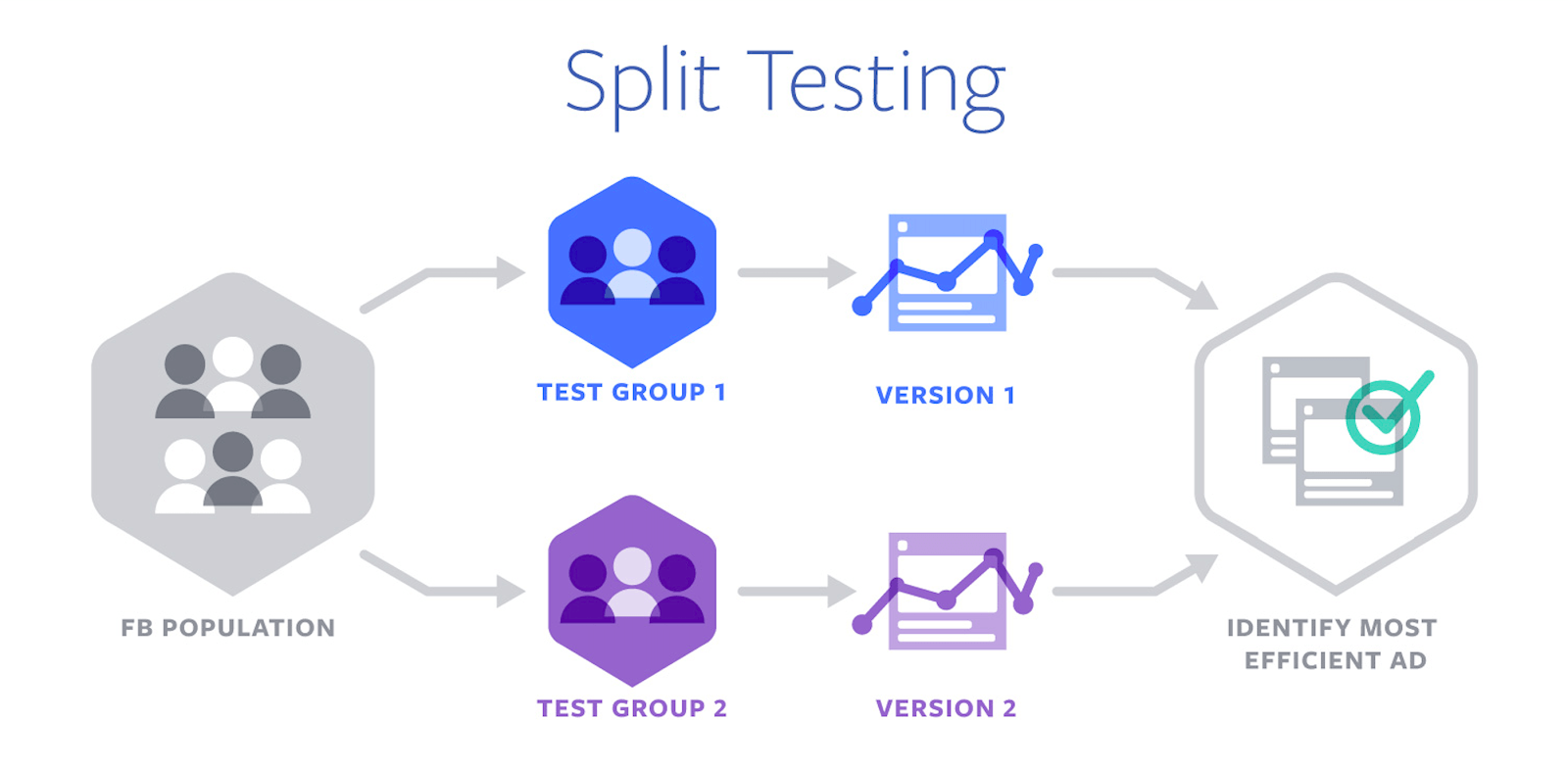

How does automatic split testing function?

- The audience is divided into groups with no overlap. The potential reach is split randomly between the ad sets, so different variants are seen by different people.

- The ad sets differ in just one variable, the item you’d like to test. Everything else must be identical.

- The effectiveness of the ad sets is measured against the selected objective. The ad sets are compared by their performance, and you get a winner.

- You receive a notification when the test is complete, and you can use the winning variant as a template to launch an ad campaign or the next test.
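The non-overlapping random split in the first step can be illustrated with a short sketch. This is not Facebook’s actual mechanism, which the tool handles internally; it is a common hash-based assignment technique, and the function names, user IDs and test name are hypothetical.

```python
import hashlib


def assign_variant(user_id: str, test_name: str, n_variants: int) -> int:
    """Map a user to exactly one variant, the same one on every call."""
    # Hashing the test name together with the user ID gives a stable,
    # effectively random bucket that differs from test to test.
    digest = hashlib.sha256(f"{test_name}:{user_id}".encode("utf-8")).hexdigest()
    return int(digest, 16) % n_variants


def split_audience(user_ids, test_name, n_variants):
    """Divide an audience into n_variants groups with no overlap."""
    groups = [[] for _ in range(n_variants)]
    for user_id in user_ids:
        groups[assign_variant(user_id, test_name, n_variants)].append(user_id)
    return groups


# Hypothetical audience of 1,000 users split between two ad sets.
audience = [f"user-{i}" for i in range(1000)]
group_a, group_b = split_audience(audience, "headline-test", 2)
print(len(group_a), len(group_b))
```

Because each user lands in exactly one group, no one sees both variants, which is the property the list above describes.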

How to launch a split test

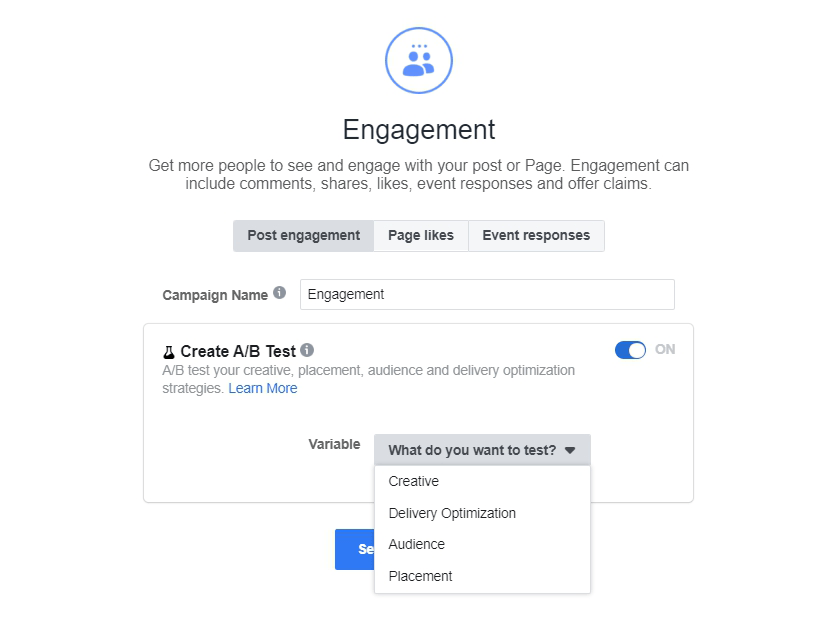

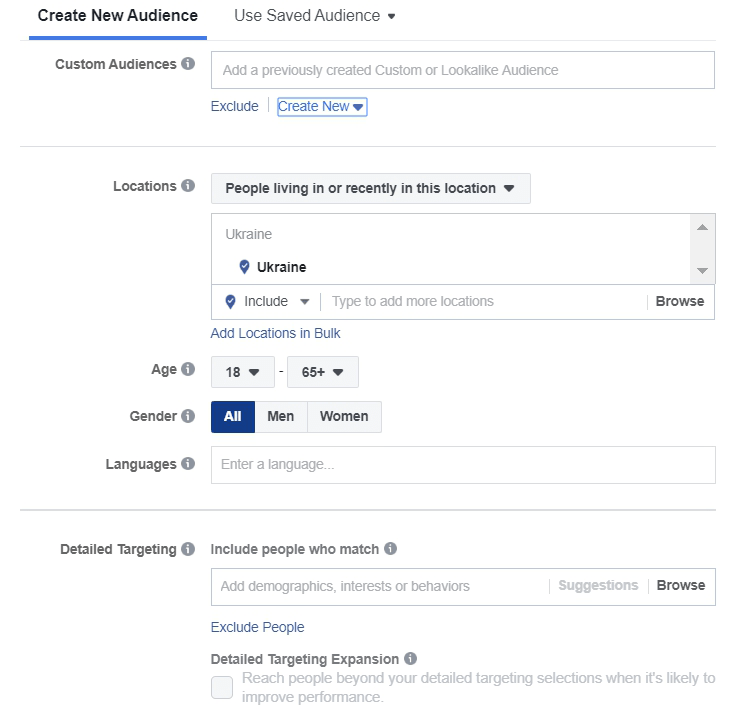

With the latest update, split testing is a simple opt-in in the section where you select the ad campaign’s objective.

Next, you’ll see additional options in the campaign settings. The most important new one is the window for selecting a variable.

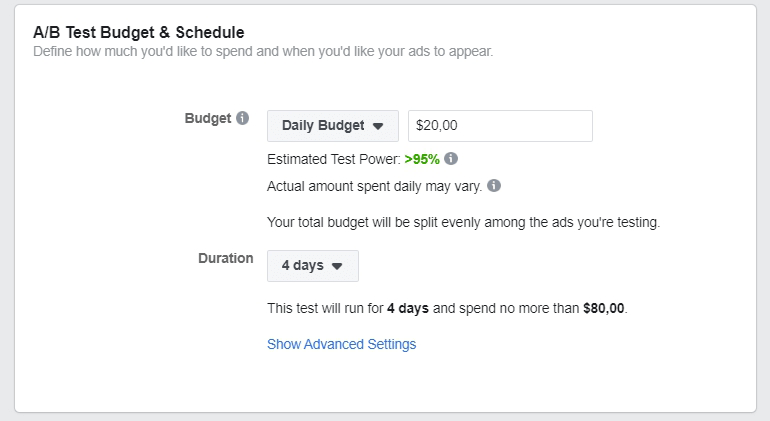

The remaining settings depend on the selected variable. In addition, you can configure how the budget is allocated between the tested ad sets. By default, the budget is divided into equal parts.

When working with splits, it is important to keep “test sensitivity” in mind. Sensitivity is the probability of detecting a difference between ads if there is one. The less money you are willing to spend on a test, the lower its sensitivity will be. For example, with a daily budget of $10, the sensitivity is 62%. Facebook recommends using a budget that allows you to reach a test sensitivity of 80%.
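Sensitivity in this sense corresponds to what statisticians call the power of a test. The sketch below is not Facebook’s own calculation. It assumes a two-sided two-proportion z-test on conversion rates, and the function name, the conversion rates and the reach figures are hypothetical values chosen only to show how sensitivity grows with the number of people each ad set reaches.

```python
from math import sqrt
from statistics import NormalDist


def estimate_sensitivity(rate_a, rate_b, users_per_ad_set, alpha=0.05):
    """Approximate the power of a two-sided two-proportion z-test.

    rate_a, rate_b: assumed true conversion rates of the two ad sets.
    users_per_ad_set: people reached by each ad set (hypothetical input).
    alpha: accepted false-positive rate.
    """
    normal = NormalDist()
    z_crit = normal.inv_cdf(1 - alpha / 2)
    # Standard error of the difference between the two observed rates.
    std_err = sqrt(
        rate_a * (1 - rate_a) / users_per_ad_set
        + rate_b * (1 - rate_b) / users_per_ad_set
    )
    z_effect = abs(rate_a - rate_b) / std_err
    # Probability that the observed difference lands beyond either critical value.
    return normal.cdf(z_effect - z_crit) + normal.cdf(-z_effect - z_crit)


# A larger budget buys more reach, and more reach raises sensitivity.
for reach in (1_000, 5_000, 20_000):
    print(reach, round(estimate_sensitivity(0.020, 0.025, reach), 2))
```

The same logic explains why a small daily budget gives a weak test: with few people reached, even a real difference between the ads is likely to go undetected.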

What variables can be tested?

Automatic split testing lets you compare variables in four categories:

- Creative. If you are not sure which headline or image will work in your case, this is your option.

- Placement.

- Audience.

- Delivery optimization. In this section, you can also set the bid cap, or choose between paying for impressions or for clicks.

With the release of the April update, split testing is available for the following campaign objectives: Traffic, App installs, Lead generation, Conversions, Video views, Catalog sales, Reach and Engagement.

Can I edit the ads launched in the split-testing mode?

It is a bad idea to make changes to a test that has already been launched.

Even if you don’t change the variable itself, changing other settings in the ad set may spoil the ‘clean’ results. Simply put, the choice of the best option will no longer be objective.

For example, suppose you’re testing different audiences and then decide to change something in the design. If the audience starts to respond more actively, you can’t be sure whether that is due to the optimization or to the better design.

If you change one ad set anyway, you must change the other one in the same way. Discrepancies between the ad sets after the edits may stop your campaign’s delivery.

A few minor changes are considered tolerable:

- At the ad level, you can fix a small typo.

- At the campaign level, you can edit the budget or the delivery schedule.

How to evaluate the results of a split test?

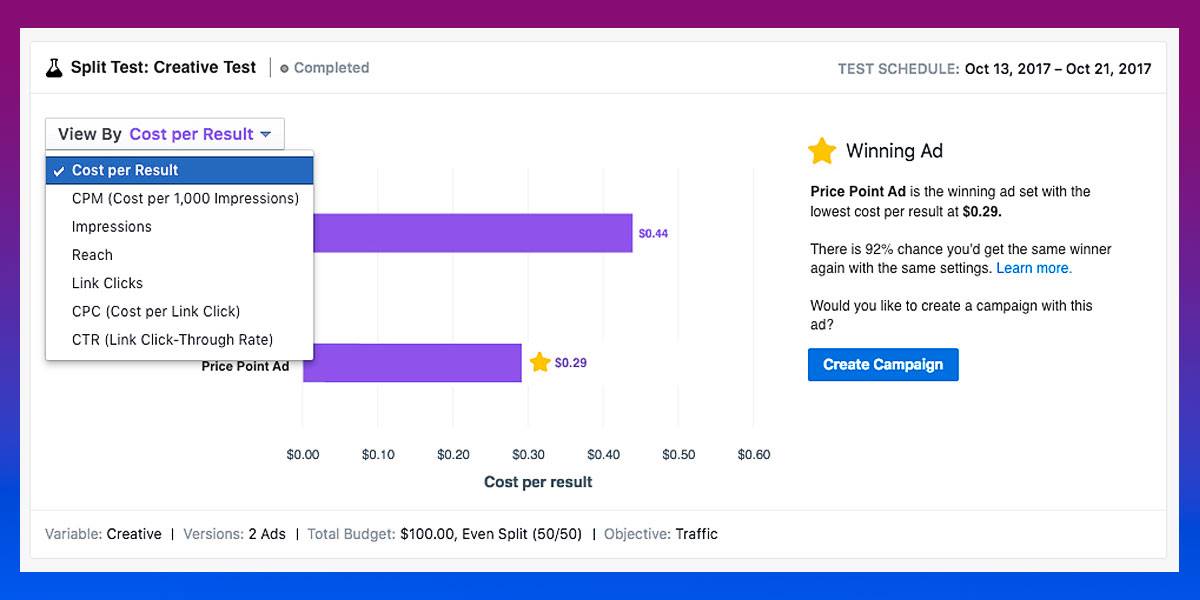

Once the test winner is determined, you receive a notification in Ads Manager and by email.

With the latest update, Facebook has also added a new graph for tracking the performance of active split tests.

In addition to the winner, you can also see a percentage that represents the chance of getting the same result if you ran the test again.

The winner of a split test usually has this indicator above 75%. If you run 3, 4 or 5 ad sets at the same time, percentages below 40%, 35% and 30% respectively mean that all ad sets have similar results and there is no clear winner.
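These thresholds can be collected into a small lookup. This is only one possible reading of the figures quoted in this article, not Facebook’s own logic, and the function name and the returned labels are made up for illustration.

```python
# Percentages below which the article says there is no clear winner,
# keyed by the number of ad sets in the test.
NO_CLEAR_WINNER_BELOW = {3: 40.0, 4: 35.0, 5: 30.0}

# Level that the winner of a split test usually exceeds.
TYPICAL_WINNER_ABOVE = 75.0


def interpret_confidence(confidence_pct: float, n_ad_sets: int) -> str:
    """Turn the reported percentage into a rough verdict."""
    floor = NO_CLEAR_WINNER_BELOW.get(n_ad_sets)
    if floor is not None and confidence_pct < floor:
        return "no clear winner"
    if confidence_pct > TYPICAL_WINNER_ABOVE:
        return "typical winner"
    # The article gives no guidance for values in between.
    return "unclear"


print(interpret_confidence(38, 3))
print(interpret_confidence(82, 2))
```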

If your test results show low confidence, Facebook recommends re-testing with a longer run time or a higher budget.

As for the budget settings, the system makes the following recommendations: split testing usually takes 3 to 14 days, and the recommended budget is above USD 440.

This once again raises the question: is it worth using the Facebook tool instead of running manual tests?

What the Facebook split testing has that the manual testing doesn’t

The main distinguishing feature of Facebook split testing is the absence of audience overlap.

The audiences of the tested ad sets are mutually exclusive. You don’t compete with yourself in auctions, so the cost per result doesn’t go up.

That said, showing different options to the same user may be just what you need. For example, you may want a user to see both options and pick the winner by opting in to one of them. The tool rules out this possibility: the A and B variations are seen by different users who share the characteristics specified in the targeting settings.

You can still show different messages to the same user to determine the most effective one on an identical audience. To do this, you have to build a suitable ad campaign structure. You also have to cover a sufficient share of the audience with an appropriate budget so that users are highly likely to see both options.

The Median ads team remains a supporter of manual testing. We will gladly share our techniques for constructing such tests in upcoming articles. Subscribe to our blog and don’t forget to leave us feedback in the comments and messages, so that we can prepare the most relevant information for you.

What do advertisers who actively use split testing say about it?

We ran a small survey among advertisers who were ready to share their experience with the tool.

Here are some thoughts from the specialists who were kind enough to give us their comments.

Nicholas Lee from IHS Digital is fully satisfied with the tool:

“For a company, I’m working with, we wanted to test some manual bidding on our best-performing audience. We scheduled the ad sets for 30 days and allocated $600/Day per ad set. We ran the experiment for 7 days and were able to turn on the ads when we felt we had our answer. They don’t trap you into spending money, you still have the option to cancel the experiment whenever you feel you have your answer.”

“Typically when you run 2 ad sets targeting the same audiences you run the risk of competing against yourself in the impression auctions. The tool eliminates that issue by automatically separating the audience so you can run your experiments worry-free,” Nicholas continues.

“I think it depends on the prospective audience size,” says Ted Sanders, a digital marketing specialist from Colorado. “Smaller audiences with the creative testing tool aren’t as effective, however, bigger audiences seem to have better results. My observations are from tests I’ve done for my employer, plus the podcast by Digital Marketer.”

“For me it is useful because I get a good result of a ‘clean’ test/test group, ’cause there is no overlap and so on. And since now, you have a couple of split-test possibilities. So you can test several things before you really start with the big budget. Sometimes, there is not enough budget to work with split-tests, so manual a/b-tests can be quite important,” notes Lars Plettau, who launches ads for several clients in the automotive and fitness/health fields.

Peter Kostjukov, Digital Marketing Analyst at Profi.Travel and author of the Targetboy blog, echoes the concern about increased costs:

“I tried out the tool in its first version after an initial release and didn’t like it due to the high CPM. Since then, the tool has been updated, but I’m still missing a fitting project to test it on. If we talk pragmatically, I think that such split testing may be a good option only for the e-commerce with a large volume of ads.”

Overall, we also received plenty of recommendations to run tests manually: “If you know how to split test, do it yourself.” With manual testing, you get not only the advantage of lower ad spend but also more convenient scaling.

Here is what Eugene Mokin, CEO of Median ads, adds:

“Initially, split testing was available only in Ads Manager, while it was absent in Power Editor. Now, after their merger, we all see Facebook’s suggestion to launch a split test while creating an ad campaign. It seems that this tool suits those who have not yet fully learned how to structure ad campaigns. If you understand structuring, it’s better to choose manual testing. It’s not only about being able to adjust the tests. You’ll also be able to start scaling at any time, once you get the appropriate signals.”

In our future articles, we will certainly write about how to build the campaign structures for manual testing. Remember to sign up, stay tuned and don’t miss anything!
